It’s a common problem – you want to programmatically extract data about your cloud estate and wish you could query your AWS resources with SQL. The console can’t present the data you want in the way you need, and the next best option is using the AWS CLI to filter and extract pieces of information from a large block of JSON results. Either that or you’re forced to brush up your Python skills yet again and muddle together some cogent results with boto3.

This is kind of awful – for each resource you need to look up the specific CLI reference documentation, examine the data structure of the JSON response, and then piece together a very unintuitive querying format to get at what you want.

I remember spotting this neat little AQ package on GitHub in 2016 and really loved the idea of using natural SQL to extract info about my AWS estate. Sadly, as with many projects, this was abandoned immediately and a PR to improve the basic functionality (from my own feature request I’d forgotten about) has sat idle and waiting for merge for more than 3 years. Surely there must be a better way to query AWS with SQL?

Then along comes Steampipe, and it looks like everything I’d ever wanted from AQ but more. Grown out of an in-house tool, I’m immediately impressed – they’ve thought about this properly.

Firstly, it’s positioned as a generic querying framework that supports plugins from multiple providers. Where have you heard that before? In much the same way Terraform is a declarative language for resource CRUD and configuration management over existing vendor APIs, Steampipe is the same except for read-only data extraction using the SQL query language you know and love.

Utilising PostgreSQL Foreign Data Wrappers, it makes API results directly queryable in a seamless manner. You’ll know well that many APIs can be pretty slow to respond, and so Steampipe also has support for materialized views which persist results (acting as a cache) that can be re-queried in mere milliseconds.

They’ve got a tidy list of supported vendor plugins including Azure, GCP, DigitalOcean, GitHub, Slack, and for the purposes of my review, AWS!

Installation

As a filthy Windows user that likes to have my cake and eat it, I’m running Windows Linux Subsystem (WSL) and there’s an easy-to-paste command with a quick copy link. A rapid installation followed by a steampipe plugin install aws and I’m ready to roll.

Authentication either picks up AWS access keys in your environment variables (handy for those using AWS SSO who can quickly paste a time-limited set of generated credentials into their console), or with a credential profile selected by updating the AWS_PROFILE env variable.

Usage

What to query first? How can I find out what’s available?

steampipe query pops me into an interactive console with a very nice command autocomplete prompt as soon as I type the period key.

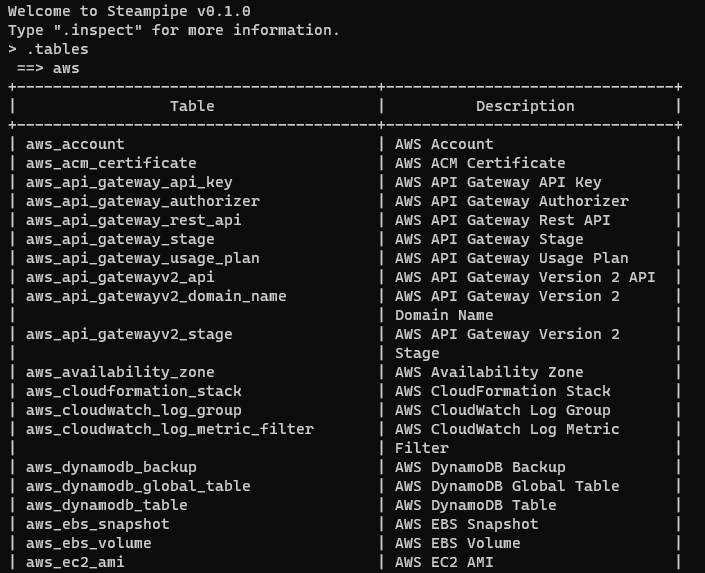

I use this to set my output format to table, and turn on query timing because I’m curious, and then inspect the available AWS tables:

OK there’s a lot of tables here – 74 at the time of writing. I know I’ve got some ACM certificates in this account, so without looking up any further documentation, I go for:

select * from aws_acm_certificate

Uh oh… No results. A quick check of the docs (which I should have read properly the first time) confirms I’m stupid – I need to set AWS_REGION as an environment variable or my credentials are no good. This is the first limitation of the tool in the 0.1.0 AWS plugin release; single region support only.

I try again and this time I get a full table result with all of my certificate details – very nice. Also very verbose, scanning way off the console screen – but the arrow keys let me move around the results to inspect the data (implementing less) – nice touch.

A Real Test

I had a very specific issue recently that I wanted to test with Steampipe. You might be interested to learn that AWS ECS in Fargate mode does not expose the running container’s Public IP in the Task Metadata. This is extremely annoying if you need your container to know what its Public IP is. You might want this in order to do something like dynamically update a Route53 record to point at the running Task. AWS PLZ FIX!

So in order to get the Public IP, I had to first look up the Private IP (which is in the metadata):

private_ip=$(curl ${ECS_CONTAINER_METADATA_URI_V4}/task | jq -r '.Containers[0].Networks[0].IPv4Addresses[0]')

And then use this result to do a separate, filtered lookup of network interfaces to see which Public IP was attached to the same interface as the Private IP:

public_ip=$(aws ec2 describe-network-interfaces --filters Name=addresses.private-ip-address,Values=$private_ip --query 'NetworkInterfaces[0].Association.PublicIp' --region $AWS_REGION --output=text)

Clunky as hell. Can I do the same in a single query with Steampipe? Turns out, no not yet, as ECS is not one of the tables currently available in the plugin. Ideally I’d like to look up the task by name or cluster/service, select the private IP, and use that as a nested WHERE query against the Public IP lookup of the network interfaces table.

But what I can do is replace my second statement with this:

select association_public_ip from aws_ec2_network_interface where private_ip_address = '$private_ip'

This makes a lot more logical sense and definitely didn’t take me more than 5 minutes to work out what the correct query syntax should be with the tricky CLI command format.

Query as a Service

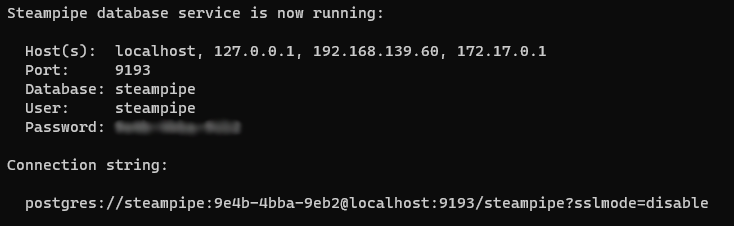

Steampipe also lets you run the tool as a service. How does it work?

Simply run

steampipe service start:

And now you’ve got a local PostgreSQL-compatible endpoint to query directly!

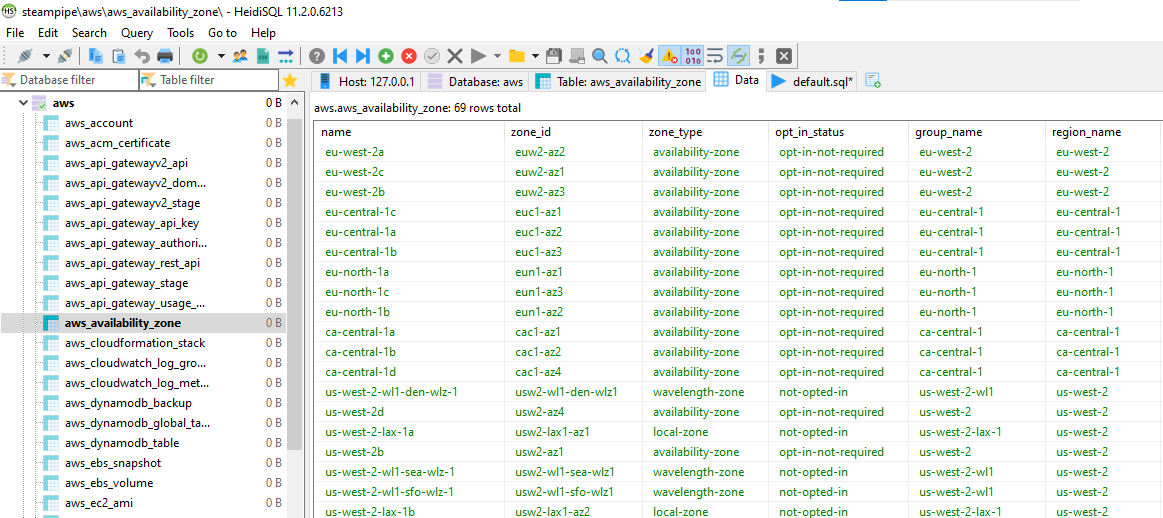

My favourite UI in Windows for SQL is HeidiSQL – a truly amazing tool that supports mySQL, PostgreSQL, SQLService, SQLite, SSH tunnelling – you name it. It’s completely free (but donations are welcome) and is diligent and continuously updated. Seriously go download it.

I connect to my localhost and here we go!

This is genuinely beautiful to look at. There’s so much data here readily available at my fingertips, and by leveraging my favourite SQL client I can luxuriously write, save, and run some really complex queries against AWS meta-data that would otherwise be very difficult to do, or would be prohibitive for a proficient DBA who hasn’t had enough AWS exposure.

By being able to comprehend AWS resources as a series of database tables, rows, and columns, it unlocks understanding to a whole new subset of engineers that might have otherwise struggled to get to grips with it. This is great!

One final example of a real world problem

Let’s say I’ve got a lot of AMIs in my AWS account and I want to do a clean-up, but I only want to delete those which aren’t in use by any running instance. How do I figure out what to delete? I need to query AWS with SQL!

Simple:

SELECT * FROM aws_ec2_ami WHERE image_id NOT IN (SELECT image_id FROM aws_ec2_instance WHERE instance_state = 'running')

And I get a list of AMIs that aren’t being used by any running instance and I know what to delete. Do you know how long it would have taken me to write the Python script to expound the logic of this simple query? FAR TOO LONG.

Lastly, you can also do cross-plugin queries. Imagine the possibilities! I could use the Slack plugin to query the Slack user ID of someone by using their GitHub email address (queried via the GitHub plugin) as a foreign key. A particular dev has failed the build? Automatically shame them directly on Slack via a quick lookup!

Just a disclaimer: This article is not sponsored, I’m just really enthusiastic about being able to interact with AWS (and other vendors) in this way. I’m impressed at the careful, professional delivery of the product and its documentation, whilst also remaining completely free.

Features I’d like to see in the future for the AWS plugin specifically:

- Multi-region support

- Additional tables/mappings to different API namespaces

- CREATE and UPDATE functionality for certain resources – this would be a BIG one, although I expect that it would be intensive effort to get good coverage. There are however many areas where Terraform has no API support, for example route53domains. If I could update domain name registrant contact details with a SQL query, this would be incredible and have many applications!

The issues page is already busy with more enhancements and bugfixes, and it would be great to see this fully expanded to its full potential.